Artificial intelligence (AI) is a fast-evolving family of technologies that can bring a wide array of economic and social benefits across many sectors and activities. However, AI also poses new risks and challenges for human rights, safety, and democracy. To address these issues, the European Union has proposed the first-ever comprehensive legal framework on AI, the Artificial Intelligence Act (AI Act), which aims to promote trustworthy and human-centric AI in Europe and beyond.

The AI Act classifies AI systems into four categories of risk: unacceptable, high, limited, and minimal. Unacceptable-risk AI systems are those that violate fundamental rights or pose a clear threat to people's safety or security, such as social scoring systems or, with narrow exceptions, real-time remote biometric identification in publicly accessible spaces for law enforcement purposes. These systems are prohibited under the AI Act.

High-risk AI systems are those used in critical sectors or contexts, such as health, education, justice, law enforcement, or transport, where they could cause significant harm to people or society. These systems are subject to strict obligations before they can be placed on the market or put into service, such as conformity assessment, transparency, human oversight, and accuracy requirements.

Limited-risk AI systems are those that pose specific transparency risks, such as chatbots or deepfakes; users must be informed that they are interacting with, or exposed to, an AI system. Minimal-risk AI systems are those that pose no or negligible risk to people or society, such as video games or spam filters, and are not subject to any specific obligations under the AI Act.
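For teams that track this classification in software, the four tiers and the obligations summarized above can be captured in a small lookup structure. The sketch below is a simplified, hypothetical model: the names `RiskTier`, `OBLIGATIONS`, `obligations_for`, and `may_deploy` are illustrative assumptions, and the obligation lists only repeat the examples given in this article, not the full legal text.

```python
from enum import Enum


class RiskTier(Enum):
    """Simplified view of the AI Act's four risk tiers (illustrative only)."""
    UNACCEPTABLE = "unacceptable"
    HIGH = "high"
    LIMITED = "limited"
    MINIMAL = "minimal"


# Hypothetical summary of the obligations named in this article;
# not legal advice and not an exhaustive list of the regulation's requirements.
OBLIGATIONS: dict[RiskTier, list[str]] = {
    RiskTier.UNACCEPTABLE: ["prohibited: may not be placed on the EU market"],
    RiskTier.HIGH: ["conformity assessment", "transparency", "human oversight", "accuracy"],
    RiskTier.LIMITED: ["inform users they are interacting with an AI system"],
    RiskTier.MINIMAL: [],
}


def obligations_for(tier: RiskTier) -> list[str]:
    """Return the summarized obligations for a given tier."""
    return OBLIGATIONS[tier]


def may_deploy(tier: RiskTier) -> bool:
    """Only unacceptable-risk systems are banned outright."""
    return tier is not RiskTier.UNACCEPTABLE


if __name__ == "__main__":
    for tier in RiskTier:
        duties = obligations_for(tier) or ["no specific obligations"]
        print(f"{tier.value:>12}: deployable={may_deploy(tier)}; {', '.join(duties)}")
```

Keeping the mapping as data rather than scattered `if` statements makes it easier to update when the final rules or internal legal guidance change.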

Categorizing Generative AI Models Under the European Union’s AI Act

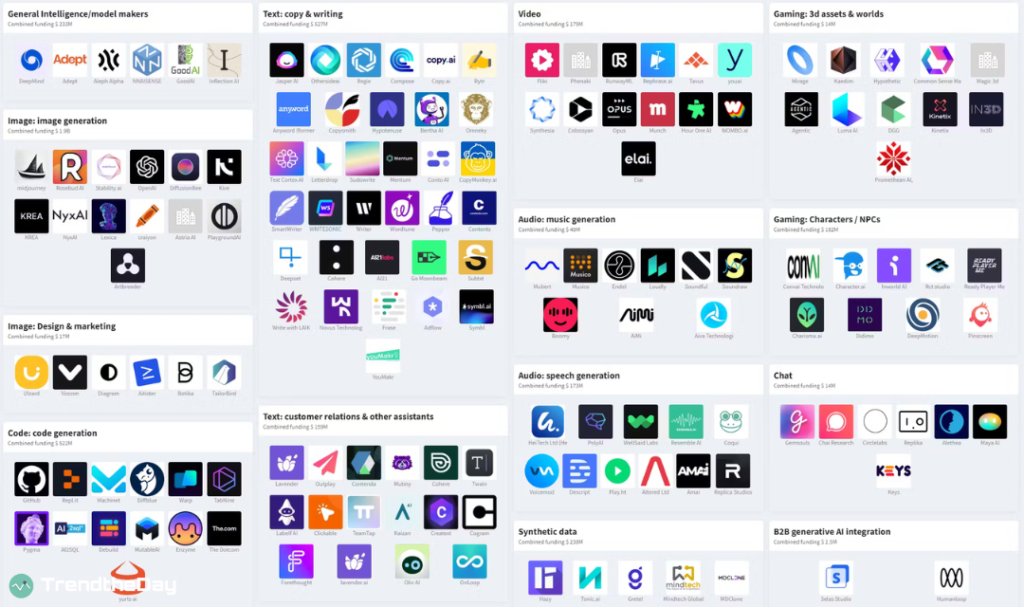

Generative AI models are a type of AI system that can create novel content, such as text, images, audio, or video, based on some input data or parameters. Generative AI models can have various applications and benefits, such as enhancing creativity, generating art or entertainment, improving communication or education, or supporting research or innovation. However, generative AI models can also pose significant risks and challenges, such as infringing intellectual property rights, spreading misinformation or disinformation, manipulating public opinion or behaviour, or undermining trust or security.

The Commission's original proposal for the AI Act did not explicitly define or mention generative AI models as a category of AI system (obligations for general-purpose AI models were added later in the legislative process). However, depending on their intended use and potential impact, generative AI models could fall under different risk categories and be subject to different obligations under the AI Act. For example:

- A generative AI model that creates realistic images of human faces for identity verification purposes could be considered a high-risk AI system, as it is used in a critical context and could cause harm to people’s privacy or security. This system would have to comply with the strict requirements for high-risk AI systems under the AI Act.

- A generative AI model that creates fake news articles or videos for political propaganda purposes could be considered an unacceptable-risk AI system, as it may violate fundamental rights and pose a clear threat to democracy. Such a system would be prohibited under the European Union's AI Act.

- A generative AI model that creates chatbot responses for customer service purposes could be considered a limited-risk AI system, as it poses specific transparency risks. Under the AI Act, this system would have to inform users that they are interacting with an AI system.

- A generative AI model that creates artistic paintings or music for entertainment purposes could be considered a minimal-risk AI system, as it poses no or negligible risk to people or society. This system would not be subject to any specific obligations under the European Union’s AI Act.
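The transparency obligation for a limited-risk customer service chatbot can be implemented as a thin layer around the model's output. The following is a minimal sketch, assuming a hypothetical `ChatSession` class and disclosure wording of our own; `generated_text` stands in for whatever the underlying generative model returns. The AI Act requires that users be informed, but this wording and the choice to disclose on the first turn are illustrative assumptions.

```python
from dataclasses import dataclass

# Hypothetical disclosure text: the AI Act requires that users be informed,
# but it does not prescribe this particular wording.
AI_DISCLOSURE = "You are chatting with an automated AI assistant."


@dataclass
class ChatSession:
    """Hypothetical session wrapper that tracks whether the disclosure was shown."""
    disclosed: bool = False

    def respond(self, generated_text: str) -> str:
        """Return the model output, prefixed with the disclosure on the first turn."""
        if not self.disclosed:
            self.disclosed = True
            return f"{AI_DISCLOSURE}\n\n{generated_text}"
        return generated_text


if __name__ == "__main__":
    session = ChatSession()
    print(session.respond("Hello! How can I help with your order?"))
    print(session.respond("Your parcel ships tomorrow."))
```

Disclosing once per session keeps the conversation readable; a service could equally show the notice persistently in its interface.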

The EU’s AI Act is set to become the world’s first comprehensive legal framework for artificial intelligence. It aims to balance the promotion of innovation and excellence with the protection of values and rights in the development and deployment of AI systems. The impact of the European Union’s AI Act on generative AI models will depend on their use and risk level, but it will also create new opportunities and challenges for developers and users of such technologies. The EU’s approach to regulating AI could also influence other regions and countries in shaping their own policies and standards for trustworthy and human-centric AI.

https://trendtheday.com/how-generative-ai/

Ensuring Compliance with the AI Act: Steps for Companies

Achieving compliance with the AI Act is a multifaceted undertaking that requires companies to take a proactive and comprehensive approach to AI governance. The following strategies can help companies ensure adherence:

- Companies should cultivate a global perspective on AI compliance that covers regulations not only in the EU but also in the other regions and countries where they operate. They should continuously monitor changes in the evolving AI regulatory landscape and assess how those changes affect existing and planned AI use cases.

- Active participation in the formulation and application of AI standards and best practices, both at the industry and international levels, is crucial. Collaboration with pertinent stakeholders, including regulatory bodies, customers, partners, and civil society, facilitates the exchange of insights and experiences in the realm of AI compliance.

- Companies should establish a systematic mechanism for identifying, categorizing, and documenting their AI use cases based on their associated risk levels and corresponding obligations mandated by the AI Act. This system should also incorporate a robust framework for tracking and verifying the compliance status of AI systems throughout their lifecycle.
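A use-case inventory of this kind can start as a simple data model. The sketch below assumes hypothetical `AIUseCase` and `UseCaseRegistry` classes that record each use case's risk level, lifecycle stage, compliance status, and supporting evidence; the use-case names and evidence labels in the example are invented for illustration. A real system would likely persist this data and link it to the company's documentation.

```python
from dataclasses import dataclass, field
from enum import Enum
from typing import Optional


class RiskLevel(Enum):
    UNACCEPTABLE = "unacceptable"
    HIGH = "high"
    LIMITED = "limited"
    MINIMAL = "minimal"


class Status(Enum):
    NOT_STARTED = "not started"
    IN_PROGRESS = "in progress"
    COMPLIANT = "compliant"


@dataclass
class AIUseCase:
    """Hypothetical record for one AI use case in the inventory."""
    name: str
    purpose: str
    risk: RiskLevel
    lifecycle_stage: str  # e.g. "design", "development", "deployment"
    status: Status = Status.NOT_STARTED
    evidence: list[str] = field(default_factory=list)  # references to supporting documents


class UseCaseRegistry:
    """Minimal in-memory inventory of AI use cases and their compliance status."""

    def __init__(self) -> None:
        self._cases: dict[str, AIUseCase] = {}

    def register(self, case: AIUseCase) -> None:
        if case.name in self._cases:
            raise ValueError(f"use case {case.name!r} is already registered")
        self._cases[case.name] = case

    def update_status(self, name: str, status: Status, evidence: Optional[str] = None) -> None:
        """Record a status change, optionally with a pointer to supporting evidence."""
        case = self._cases[name]
        case.status = status
        if evidence is not None:
            case.evidence.append(evidence)

    def prohibited(self) -> list[AIUseCase]:
        """Use cases in the unacceptable-risk tier, which must not be deployed."""
        return [c for c in self._cases.values() if c.risk is RiskLevel.UNACCEPTABLE]

    def open_items(self) -> list[AIUseCase]:
        """High-risk use cases that are not yet marked compliant."""
        return [c for c in self._cases.values()
                if c.risk is RiskLevel.HIGH and c.status is not Status.COMPLIANT]


if __name__ == "__main__":
    registry = UseCaseRegistry()
    registry.register(AIUseCase("triage-assistant", "prioritize patient requests",
                                RiskLevel.HIGH, "development"))
    registry.register(AIUseCase("support-bot", "answer customer questions",
                                RiskLevel.LIMITED, "deployment"))
    print([c.name for c in registry.open_items()])  # ['triage-assistant']
    registry.update_status("triage-assistant", Status.COMPLIANT, "conformity-assessment-report")
    print([c.name for c in registry.open_items()])  # []
```

Recording prohibited use cases instead of rejecting them keeps the inventory complete, so compliance teams can see and act on everything that was discovered.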

- Providing ample resources and support for AI/ML teams is essential to meet the requirements of the AI Act, which for high-risk systems include conformity assessment, transparency, human oversight, and accuracy. Investing in tools and technologies that streamline the compliance process, such as data quality management, explainability methods, and bias detection and mitigation tools, can help ensure adherence.
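As an example of what a bias detection check can look like, the sketch below computes the demographic parity difference: the largest gap in positive-prediction rates between groups. This is one common fairness metric among many; the AI Act does not prescribe it, and the function names and the 0.1 tolerance in the example are hypothetical.

```python
from collections import defaultdict
from collections.abc import Hashable, Sequence


def selection_rates(predictions: Sequence[int], groups: Sequence[Hashable]) -> dict:
    """Share of positive (1) predictions within each group."""
    if len(predictions) != len(groups) or not predictions:
        raise ValueError("need equally long, non-empty predictions and groups")
    positives: dict = defaultdict(int)
    totals: dict = defaultdict(int)
    for pred, group in zip(predictions, groups):
        totals[group] += 1
        positives[group] += pred
    return {g: positives[g] / totals[g] for g in totals}


def demographic_parity_difference(predictions: Sequence[int],
                                  groups: Sequence[Hashable]) -> float:
    """Largest gap in selection rate between any two groups (0.0 means parity)."""
    rates = selection_rates(predictions, groups)
    return max(rates.values()) - min(rates.values())


if __name__ == "__main__":
    preds = [1, 0, 1, 1, 0, 0, 1, 0]
    groups = ["a", "a", "a", "a", "b", "b", "b", "b"]
    gap = demographic_parity_difference(preds, groups)
    TOLERANCE = 0.1  # hypothetical internal threshold, not set by the AI Act
    print(f"parity gap = {gap:.2f}, flagged = {gap > TOLERANCE}")
```

Here group "a" receives positive predictions 75% of the time and group "b" 25% of the time, so the gap of 0.50 would be flagged for review under the assumed tolerance.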

- Companies should foster an organizational culture of trust and responsibility among AI/ML teams and encourage them to uphold the ethical and legal principles of trustworthy and human-centric AI. Good compliance practices and performance should be recognized and rewarded, and any compliance issues or gaps should be addressed swiftly and effectively.

In a landscape of evolving regulations, companies must proactively navigate the complexities of AI compliance to harness the full potential of artificial intelligence while upholding ethical and legal standards.